In this project, I proposed a novel temporal feature extraction method for object detection in time-lapse camera-trap imagery. It improves the mAP@0.05:0.95 of YOLOv7 by 24% on new, unseen cameras. The work is published in MDPI Sensors: https://doi.org/10.3390/s24248002

Methodology

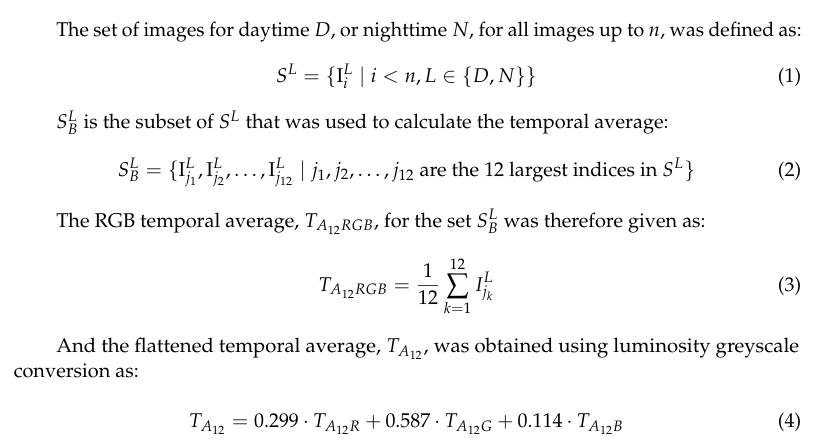

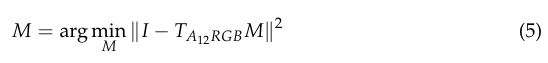

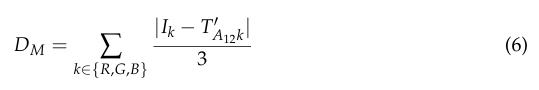

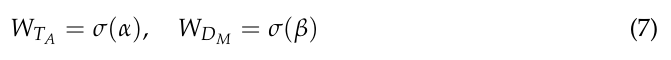

The method injects two additional channels alongside the RGB channels: DM and TA12. TA12 is the pixel-wise average of the previous 12 images taken under the same condition (day or night) as the current image, converted to greyscale.
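
As a rough illustration of the averaging step, here is a minimal NumPy sketch. It assumes the caller has already selected the prior frames that match the current image's day or night condition. The function names and the BT.601 luma weights used for greyscale conversion are my assumptions for illustration, not necessarily what the paper uses.

```python
import numpy as np

# ITU-R BT.601 luma weights: an assumed greyscale conversion for this sketch.
GREY_WEIGHTS = np.array([0.299, 0.587, 0.114])


def temporal_average(prior_frames):
    """Pixel-wise mean of RGB frames, shape (N, H, W, 3) -> (H, W, 3)."""
    frames = np.asarray(prior_frames, dtype=np.float64)
    return frames.mean(axis=0)


def to_greyscale(rgb):
    """Weighted sum over the colour axis, shape (H, W, 3) -> (H, W)."""
    return np.asarray(rgb, dtype=np.float64) @ GREY_WEIGHTS


def ta12(prior_frames, window=12):
    """Greyscale temporal average over the most recent `window` frames.

    `prior_frames` is assumed to be ordered oldest to newest and to contain
    only frames from the same day/night condition as the current image.
    """
    frames = np.asarray(prior_frames, dtype=np.float64)[-window:]
    return to_greyscale(temporal_average(frames))
```

Keeping the colour average available as a separate function is convenient because the difference mask is computed from the average before greyscale conversion.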

The difference mask, DM, is computed from the colour version of the temporal average, that is, the average before greyscale conversion. This average is first colour-corrected using least-squares regression and then subtracted from the input image. DM is the mean absolute difference across the red, green and blue colour channels.
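
The sketch below shows one way this could look in NumPy. The exact form of the colour correction is not spelled out here, so the code assumes a per-channel gain and offset fitted by least squares so that the temporal average matches the current image. That regression model and the function names are assumptions for illustration only.

```python
import numpy as np


def colour_correct(average_rgb, image_rgb):
    """Fit a gain and offset per channel (assumed model) by least squares,
    mapping the temporal average onto the current image's colours."""
    average = np.asarray(average_rgb, dtype=np.float64)
    image = np.asarray(image_rgb, dtype=np.float64)
    corrected = np.empty_like(average)
    for c in range(3):
        x = average[..., c].ravel()
        y = image[..., c].ravel()
        # Design matrix [x, 1] so the solution is (gain, offset).
        design = np.stack([x, np.ones_like(x)], axis=1)
        (gain, offset), *_ = np.linalg.lstsq(design, y, rcond=None)
        corrected[..., c] = gain * average[..., c] + offset
    return corrected


def difference_mask(image_rgb, average_rgb):
    """Mean absolute difference over R, G, B between the image and the
    colour-corrected temporal average, shape (H, W, 3) -> (H, W)."""
    image = np.asarray(image_rgb, dtype=np.float64)
    corrected = colour_correct(average_rgb, image)
    return np.abs(image - corrected).mean(axis=-1)
```

With this model, a global brightness or tint change between the average and the current image is absorbed by the fit, so the mask stays near zero except where the scene content actually differs.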

We also weight TA12 and DM with learnable attention weights, which are output through a sigmoid layer:

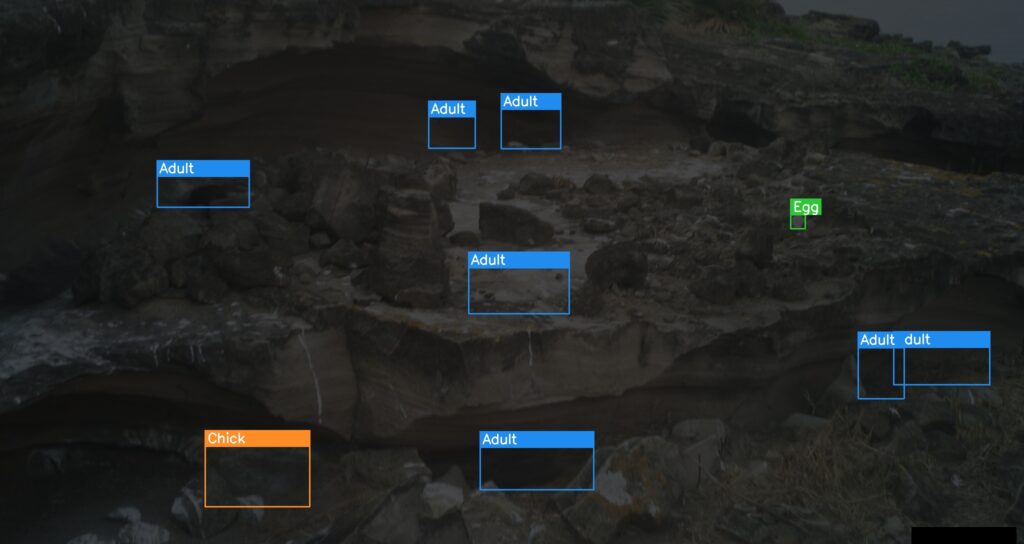
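
To make the weighting concrete, here is a deliberately simplified forward-pass sketch in NumPy. It assumes one scalar logit per temporal channel, squashed by a sigmoid into a weight between 0 and 1. The real attention module may well be richer than this, and the function names, the scalar parameters and the channel order are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    """Logistic function, mapping any real logit to the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def build_input(image_rgb, ta12_grey, dm, ta_logit=0.0, dm_logit=0.0):
    """Stack RGB with sigmoid-weighted TA12 and DM into an (H, W, 5) array.

    `ta_logit` and `dm_logit` stand in for learnable parameters; in training
    they would be updated by backpropagation along with the detector.
    """
    w_ta = sigmoid(ta_logit)
    w_dm = sigmoid(dm_logit)
    # np.dstack promotes the (H, W) channels to (H, W, 1) before stacking.
    return np.dstack([image_rgb, w_ta * ta12_grey, w_dm * dm])
```

With both logits at zero, each temporal channel is scaled by 0.5. Larger logits push the weight towards 1 and let more of that channel through to the detector.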

The following figure shows the resultant DM after weighting:

The next figure shows TA12 after weighting:

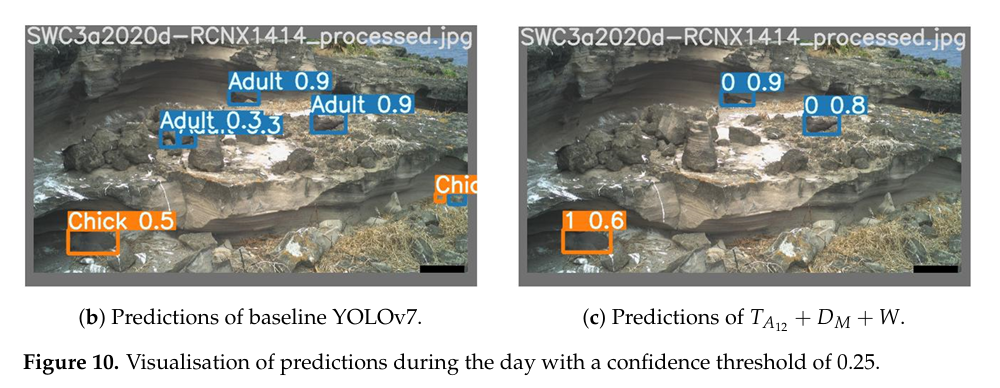

Using this method, we achieved a 24% improvement in mAP@0.05:0.95 on a dataset of breeding Round Island petrels. The following figure compares the predictions of the baseline with those of our model.
