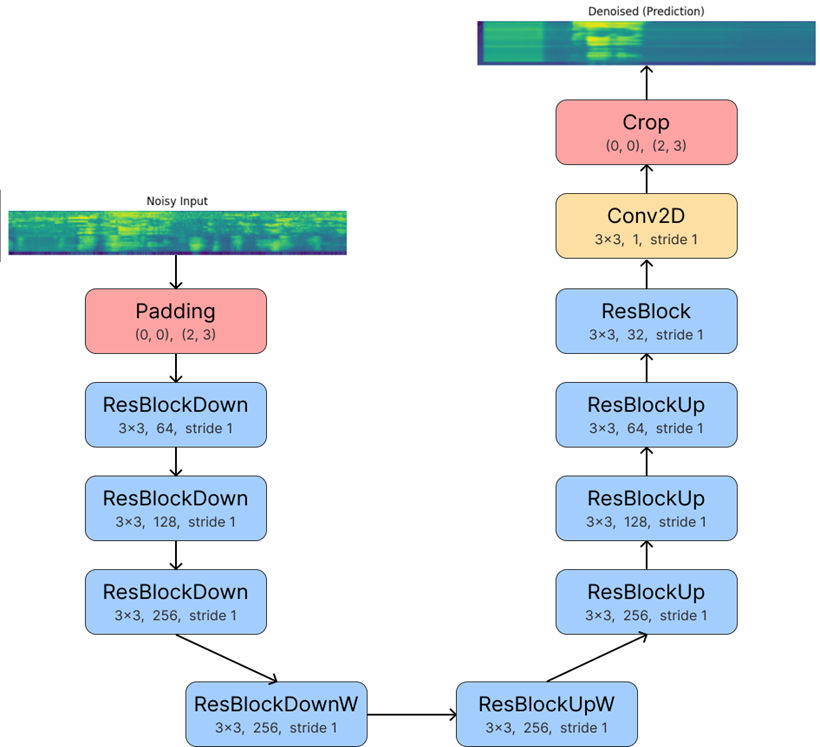

For this coursework, I built a speech utterance classifier that uses Mel-frequency cepstral coefficients (MFCCs) together with their delta and delta-delta features. I also designed a novel denoising autoencoder (DAE) that removes background noise from the Mel spectrogram before the cepstral coefficients and delta/delta-delta features are extracted. The DAE was trained with a reconstruction loss: given an input sound with added background noise, it learns to predict the original clean sound. The following figure shows the architecture of the DAE.

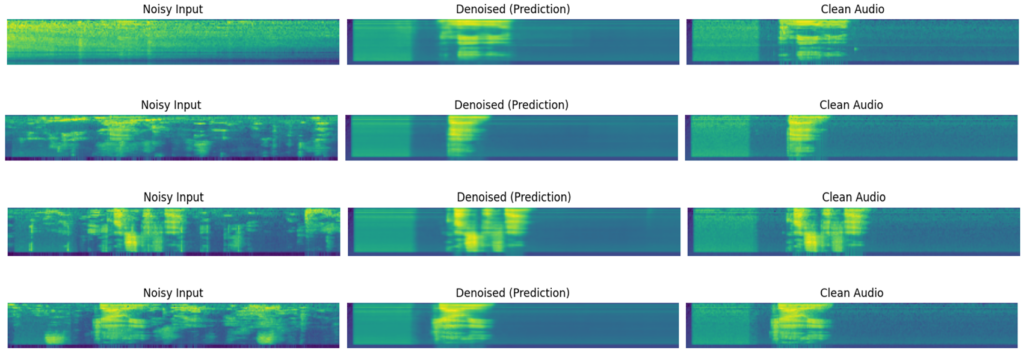
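To make the training objective concrete, here is a minimal NumPy sketch of how a (noisy input, clean target) pair and a reconstruction loss could be set up. It is not the code used for this coursework. The helper names `mix_at_snr` and `reconstruction_loss`, the use of a target signal-to-noise ratio to scale the noise, and the choice of mean squared error are all assumptions made for illustration.

```python
import numpy as np


def mix_at_snr(clean, noise, snr_db):
    """Add background noise to a clean signal at a chosen SNR (in dB).

    Hypothetical helper: mixing at a fixed SNR is an assumption,
    not something the original write-up specifies.
    """
    clean = np.asarray(clean, dtype=np.float64)
    noise = np.asarray(noise, dtype=np.float64)

    # Repeat or trim the noise clip so it covers the whole clean signal.
    reps = int(np.ceil(len(clean) / len(noise)))
    noise = np.tile(noise, reps)[: len(clean)]

    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)

    # Choose scale so that clean_power / (scale^2 * noise_power) = 10^(snr_db / 10).
    scale = np.sqrt(clean_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return clean + scale * noise


def reconstruction_loss(predicted, target):
    """Mean squared error between the DAE output and the clean target.

    MSE is assumed here; the write-up only says "reconstruction loss".
    """
    predicted = np.asarray(predicted, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean((predicted - target) ** 2))


# Example pair: the DAE would receive `noisy` (or its Mel spectrogram)
# and be penalised for deviating from `clean` (or its Mel spectrogram).
rng = np.random.default_rng(0)
clean = np.sin(np.linspace(0.0, 100.0, 16000))
noisy = mix_at_snr(clean, rng.normal(size=4000), snr_db=5.0)
baseline = reconstruction_loss(noisy, clean)  # loss of a "do nothing" denoiser
```

The `baseline` value is the loss an identity mapping would incur, so a trained DAE should score below it on held-out data.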

The following figure shows examples of the denoised Mel spectrograms versus the ground truths on the test set.

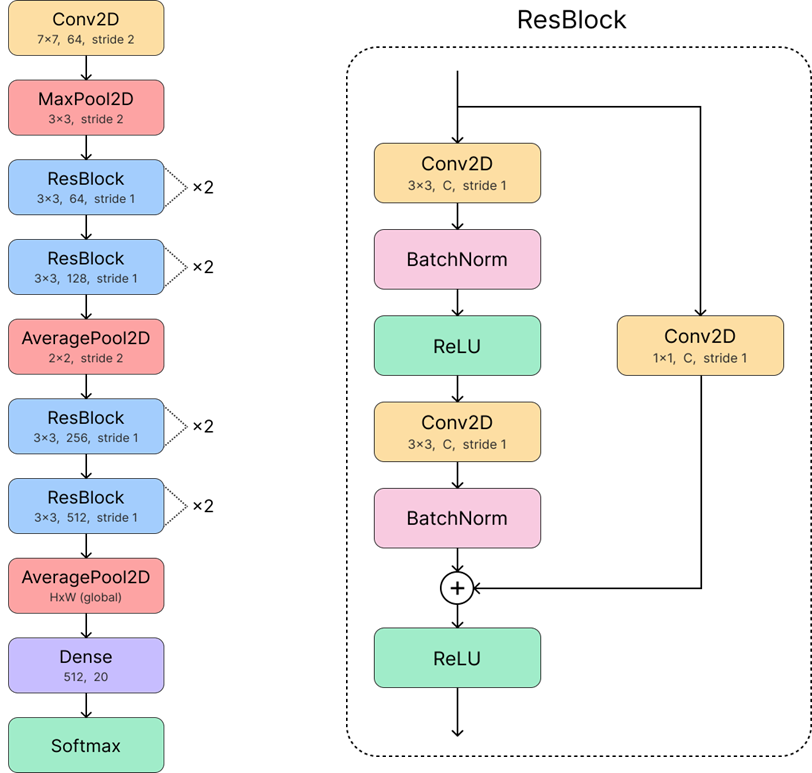
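The feature extraction step that follows denoising (cepstral coefficients plus delta and delta-delta features) can be sketched in plain NumPy as below. This is an illustrative sketch only. The function names, the 13-coefficient default, the regression window of width 2, and the stacking of the three feature maps as image-like channels are all assumptions, not details taken from the coursework.

```python
import numpy as np


def dct_matrix(n_out, n_in):
    """First n_out rows of the orthonormal DCT-II basis, shape (n_out, n_in)."""
    k = np.arange(n_out)[:, None]
    n = np.arange(n_in)[None, :]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in)) * np.sqrt(2.0 / n_in)
    basis[0] *= 1.0 / np.sqrt(2.0)
    return basis


def mfcc_from_mel(mel_power, n_mfcc=13, eps=1e-10):
    """Cepstral coefficients from a Mel power spectrogram.

    mel_power has shape (n_mels, n_frames); the result is (n_mfcc, n_frames).
    """
    log_mel = 10.0 * np.log10(np.maximum(mel_power, eps))
    return dct_matrix(n_mfcc, mel_power.shape[0]) @ log_mel


def delta(features, width=2):
    """Regression-based delta along the time axis, with edge padding."""
    n_frames = features.shape[1]
    padded = np.pad(features, ((0, 0), (width, width)), mode="edge")
    denom = 2.0 * sum(n * n for n in range(1, width + 1))
    out = np.zeros(features.shape, dtype=np.float64)
    for n in range(1, width + 1):
        ahead = padded[:, width + n : width + n + n_frames]
        behind = padded[:, width - n : width - n + n_frames]
        out += n * (ahead - behind)
    return out / denom


def stack_features(mel_power, n_mfcc=13):
    """Stack MFCC, delta and delta-delta as 3 channels (an assumed layout)."""
    cepstra = mfcc_from_mel(mel_power, n_mfcc)
    d1 = delta(cepstra)
    d2 = delta(d1)
    return np.stack([cepstra, d1, d2], axis=0)


# Example with a random stand-in for a denoised Mel spectrogram.
mel = np.random.default_rng(0).uniform(0.1, 1.0, size=(40, 50))
features = stack_features(mel)  # shape (3, 13, 50)
```

Arranging the three maps as channels gives an input shaped like a small RGB image, which is one plausible way to feed such features to a convolutional classifier.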

I then devised a modified ResNet-18 architecture to classify the cepstral coefficients and delta/delta-delta features extracted from the denoised Mel spectrogram. This is shown in the following figure.

Without the DAE, the classifier achieved 94.8% accuracy on my self-collected speech utterance dataset. With the DAE, it achieved 99.1%. Both models were trained and tested on audio with high levels of background noise.
